Mismatch between model learning and model rollouts

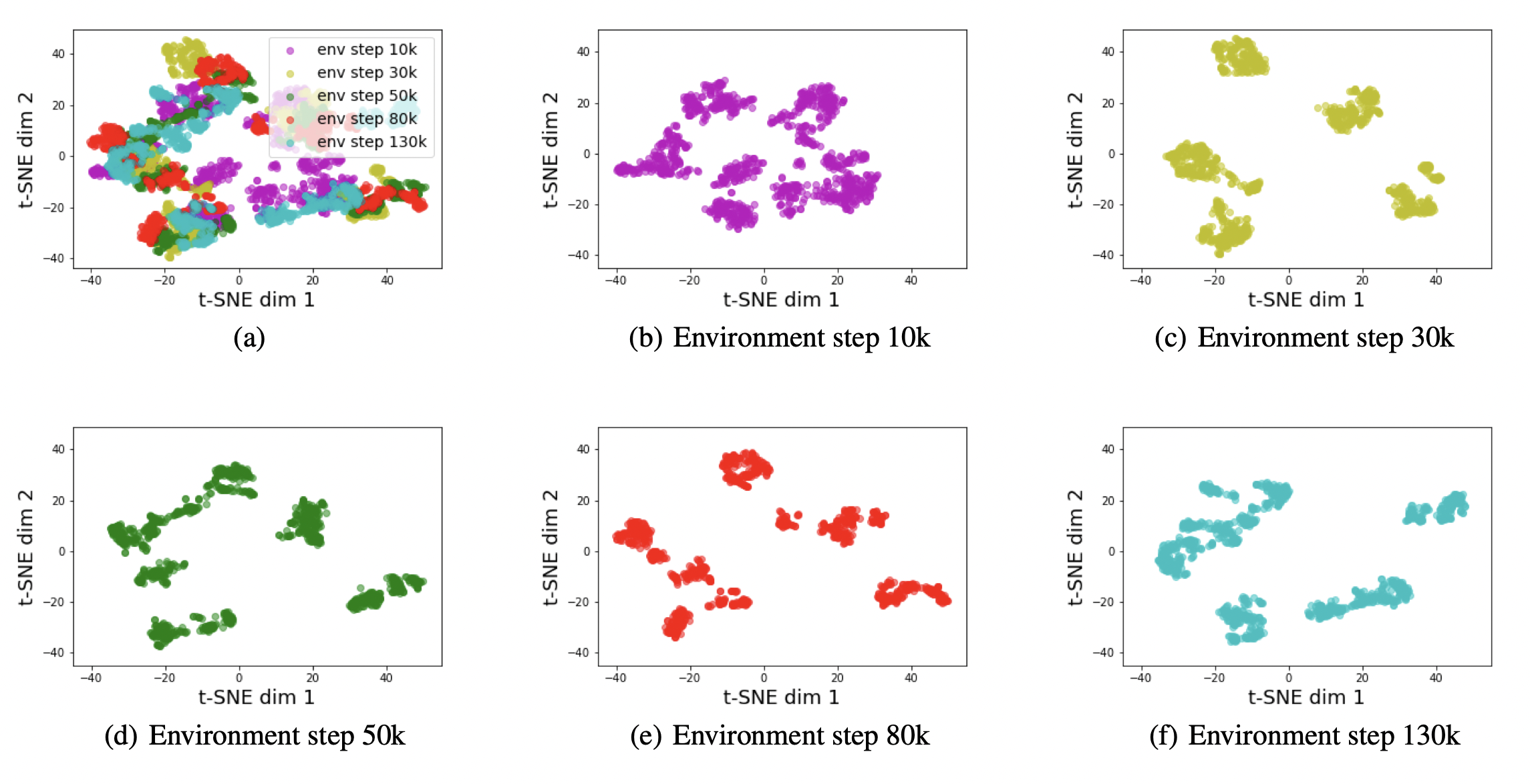

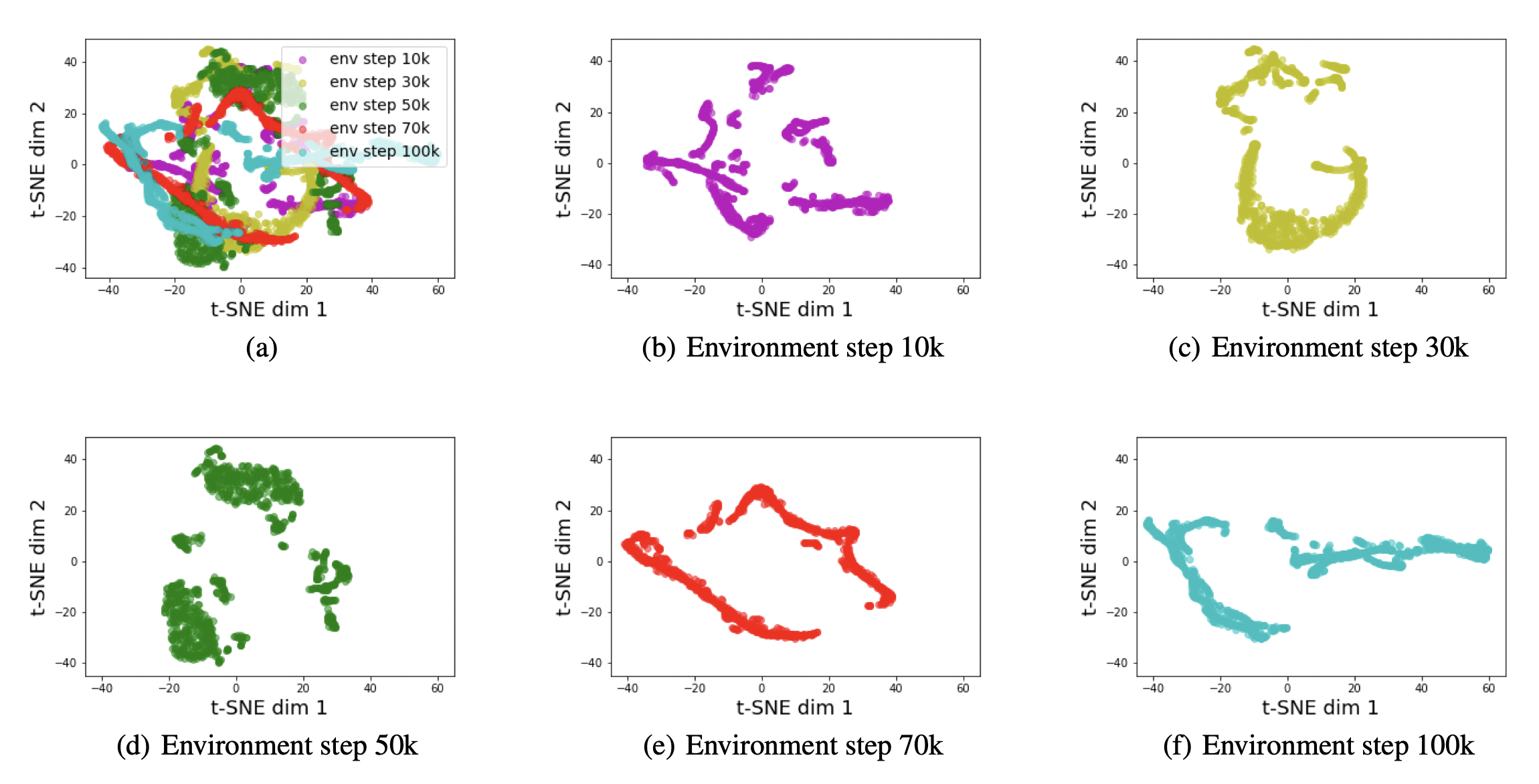

State-action visitation distribution shift during policy learning

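The state-action visitation distribution of the policy changes substantially across environment steps, because the policy keeps being updated during learning. The replay buffer retains samples from every historical policy, so it ends up holding a mixture of very different visitation distributions. The result is a large state-action visitation distribution shift within the replay buffer.

Environments: HalfCheetah and Hopper.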
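A rough way to quantify this shift is to compare each policy's visitation distribution with the aggregate distribution stored in the replay buffer. The sketch below is a minimal illustration under stated assumptions. It uses hypothetical 1-D states instead of full state-action pairs, a made-up policy whose visited states drift by one unit per policy update, and a histogram estimate of total variation distance. `total_variation` and the toy data are illustrative only and are not part of MBPO.

```python
import numpy as np


def total_variation(p_samples, q_samples, bins):
    """Histogram-based estimate of the total variation distance between two 1-D sample sets.

    Samples falling outside `bins` are ignored, so the bins should cover both sets.
    """
    p, _ = np.histogram(p_samples, bins=bins)
    q, _ = np.histogram(q_samples, bins=bins)
    p = p / p.sum()  # normalize counts to probabilities
    q = q / q.sum()
    return 0.5 * float(np.abs(p - q).sum())


# Hypothetical toy setup: policy k visits 1-D states around mean k,
# mimicking a policy whose visitation drifts as learning progresses.
rng = np.random.default_rng(0)
n_policies, n_per_policy = 5, 1000
visits = [rng.normal(loc=float(k), scale=0.3, size=n_per_policy) for k in range(n_policies)]

# The replay buffer keeps the samples of every historical policy.
buffer = np.concatenate(visits)
bins = np.linspace(-2.0, n_policies + 1.0, 61)

for k, v in enumerate(visits):
    tv = total_variation(v, buffer, bins)
    print(f"policy {k}: TV(policy visitation, replay buffer) = {tv:.2f}")
```

Because each policy contributes only a fraction of the buffer, even the latest policy's visitation distribution can sit far from the buffer's mixture distribution. That mixture is the distribution the dynamics model is trained on.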

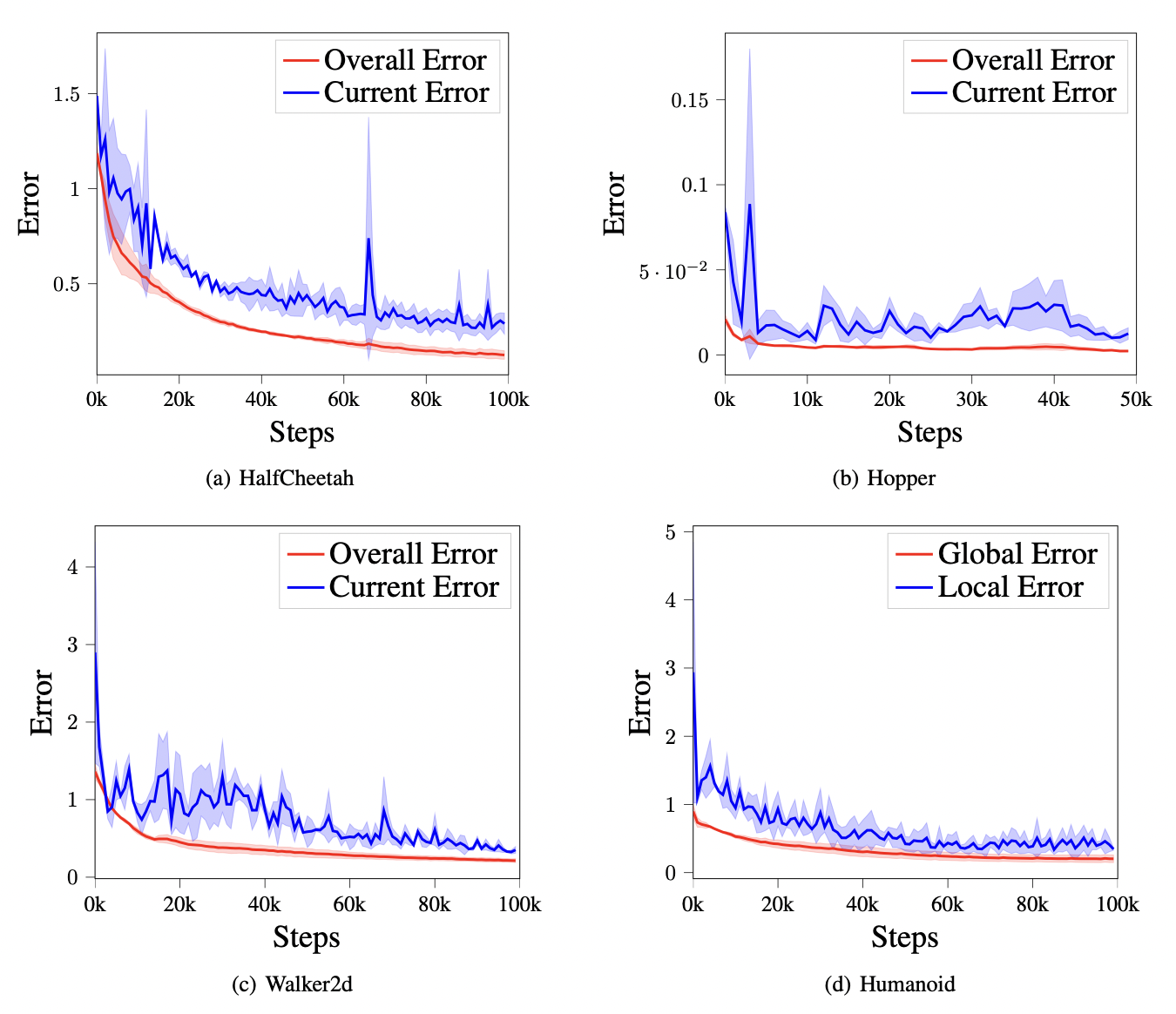

Prediction error curves of MBPO

Overall error is the model's prediction error on samples collected by all historical policies, and current error is its prediction error on samples induced by the current policy. We observe a gap between the overall error and the current error. Although the agent can learn a dynamics model that is accurate enough on the samples from all historical policies, this comes at the expense of prediction accuracy on the samples induced by the current policy. This matters because MBPO generates its model rollouts with the current policy, so the loss of accuracy falls on the state-action pairs that the rollouts depend on.
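The two error curves can be sketched as follows. This is a minimal illustration, assuming the replay buffer tags each transition with the index of the policy that collected it. It also treats the latest policy's buffer samples as a proxy for "samples induced by the current policy". The helper `prediction_errors`, the `policy_ids` tag, the toy dynamics s' = sin(s) + a, and the linear least-squares model are all hypothetical stand-ins. MBPO itself learns an ensemble of probabilistic neural networks.

```python
import numpy as np


def prediction_errors(model, states, actions, next_states, policy_ids):
    """Return (overall_error, current_error) as mean squared one-step prediction errors.

    overall_error: averaged over every transition in the buffer (all historical policies).
    current_error: averaged only over transitions collected by the most recent policy.
    """
    preds = model(states, actions)
    sq_err = np.sum((preds - next_states) ** 2, axis=-1)  # squared error per transition
    overall_error = float(sq_err.mean())
    current_mask = policy_ids == policy_ids.max()  # assumption: largest id = current policy
    current_error = float(sq_err[current_mask].mean())
    return overall_error, current_error


# Hypothetical toy data: 1-D state and action, nonlinear dynamics s' = sin(s) + a,
# and a policy whose visited states drift to larger values as learning progresses.
rng = np.random.default_rng(0)
n_policies, n_per_policy = 5, 200
S, A = [], []
for k in range(n_policies):
    S.append(rng.normal(loc=0.8 * k, scale=0.3, size=(n_per_policy, 1)))
    A.append(rng.normal(loc=0.0, scale=0.1, size=(n_per_policy, 1)))
states, actions = np.concatenate(S), np.concatenate(A)
next_states = np.sin(states) + actions
policy_ids = np.repeat(np.arange(n_policies), n_per_policy)

# Stand-in dynamics model: linear least squares fitted on the whole buffer,
# i.e. trained on the samples of all historical policies at once.
X = np.hstack([states, actions, np.ones_like(states)])
W, *_ = np.linalg.lstsq(X, next_states, rcond=None)


def linear_model(s, a):
    """Predict next states with the fitted linear weights."""
    return np.hstack([s, a, np.ones_like(s)]) @ W


overall_error, current_error = prediction_errors(
    linear_model, states, actions, next_states, policy_ids
)
print(f"overall error = {overall_error:.4f}, current error = {current_error:.4f}")
```

Fitting the model to the whole buffer minimizes the overall error by construction. Nothing in that objective specifically targets accuracy on the current policy's samples, which is why tracking the two errors separately can reveal a gap.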